The popular monitoring software Prometheus has recently introduced (from 2.6) new knobs which regulate how many queries can be executed concurrently.

There are even the same knobs for different interfaces. Here they are with their default values:

- –storage.remote.read-concurrent-limit=10

- –query.max-concurrency=20

The latter is an upper-bound value to the former option. However, the question is:

How do you choose sensible values for them?

Well, I think I have the answer.

The number should be picked such that it does not exceed the number of threads of execution on your (virtual) machine. Ideally, it should be a bit lower because if your machine will encounter huge queries, it is (probably) going to also use the CPU for other operations such as sending the packets over a network.

I recently noticed empirically that executing a “huge” (let’s not delve into the definition here) query makes my Prometheus Docker container start using just a bit more than 100% of the CPU. This gave me an idea for this blog post.

Go has a concept called goroutines which are light-weight threads that are run later on “real” threads. Also, a single goroutine is an indivisible unit of work that can be scheduled only on one thread of execution at any time. So, the question becomes: is more than one goroutine spawned during the parsing of a query?

Let’s delve into the code. We will go bottom-up: we are going to work our way upwards. Sorry if I will miss some kind of detail – you can find all of the exact information in the source code.

Prometheus has a type v1.API which handles the HTTP calls. The api.queryRange function gets spawned in a fresh, new goroutine which handles the request and returns the result. The API type itself has a member called QueryEngine which handles the lifetime of queries from the beginning till the end, and it is connected to a querier which queries the underlying TSDB.

In that function, a new range query using that querying engine is created with NewRangeQuery and then the Exec method is called on it which actually does the query. A context is passed to it which is used to limit the amount of time that it can take to perform the query.

For better or worse, the Prometheus code has a lot of types. Thus, to avoid blowing this post out of proportion and just copying, and pasting the source code, I will sum things up.

It trickles down to selecting blocks according to the specified time range. Then the blocks which are in that range are iterated over and a NewBlockQuerier is created, and then they are joined into a bigger querier which is returned for evaluating the expression that was passed. For the write-ahead-log, a segments querier is created which handles the queries that touch the WAL.

With remote read, it is a bit different. That mechanism employs what is called a fanout type which implements the queryable interface. In essence, it sends those queries to other Prometheus instances and merges them. Here, it may be that more goroutines are spawned but they are not performing much of active work – only sending a query and waiting for a result – thus we will not count them. The same principle of passing a context everywhere is used which limits the amount of time it can take.

The comment in prometheus/tsdb/db.go:801says:

// Querier returns a new querier over the data partition for the given time range.// A goroutine must not handle more than one open Querier.

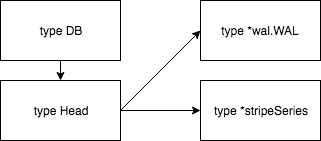

DB has a member of type Head which consequently has a member of type *wal.WAL (new data that was persisted on the disk) and *stripeSeries (new data that is still on RAM with some optimizations to avoid more lock contention).

Delete() are not called. Clever usage of RWMutex permits for it to work that way.

2 thoughts on “Choosing Maximum Concurrent Queries in Prometheus Smartly”