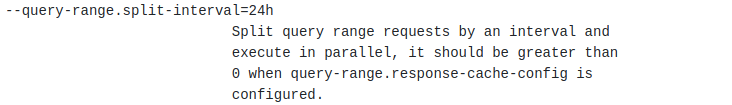

I recently ran into an interesting problem with the query-frontend component of Thanos. Here is how --query-range.split-interval is described in the --help output:

Computer science is full of trade-offs, and this is one of them. Currently, the Prometheus engine executes each query in a separate goroutine, as I have described here some time ago. This mechanism lets you work around that limitation because each PromQL query only looks for samples in the given range (unless you use the negative offset feature flag). So, if the split interval is smaller, you can use more CPU cores, but at the cost of more round-trips to retrieve the same data. If the split interval is larger, fewer round-trips are needed to execute those queries, but fewer CPU cores are used.
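To make the trade-off a bit more concrete, here is a minimal sketch of how a range could be cut into interval-aligned pieces. This is not the actual query-frontend code: the `subRange` type and the `splitRange` function are names I made up for illustration, timestamps are assumed to be non-negative milliseconds, and the real implementation also has to deal with the query step, which is ignored here.

```go
package main

import "fmt"

const hourMs = int64(60 * 60 * 1000)

// subRange is a hypothetical type describing one piece of a split query,
// covering the half-open range [Start, End) in milliseconds.
type subRange struct {
	Start, End int64
}

// splitRange cuts [start, end) into pieces whose inner boundaries fall on
// multiples of interval. Assumes non-negative timestamps.
func splitRange(start, end, interval int64) []subRange {
	if start >= end {
		return nil
	}
	if interval <= 0 {
		// No splitting requested: the whole range is one piece.
		return []subRange{{Start: start, End: end}}
	}
	var out []subRange
	for cur := start; cur < end; {
		// Next multiple of interval strictly after cur.
		next := (cur/interval + 1) * interval
		if next > end {
			next = end
		}
		out = append(out, subRange{Start: cur, End: next})
		cur = next
	}
	return out
}

func main() {
	// A 50-hour query starting at hour 10, split with two different intervals.
	for _, interval := range []int64{24 * hourMs, 12 * hourMs} {
		pieces := splitRange(10*hourMs, 60*hourMs, interval)
		fmt.Printf("interval=%dh pieces=%d\n", interval/hourMs, len(pieces))
	}
}
```

Each piece can then be executed as its own query, so a smaller interval means more pieces that can run in parallel and also more separate requests for data.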

Thus, it might make sense to reduce this interval to a very small value, especially if you are running in a data-center environment where network latency is very low and there is no cost associated with retrieving data from remote object storage. Unfortunately, it is not so easy because there are some problems with that :/

- Thanos at the moment doesn’t support single-flight for index cache lookups. So, with a very small split interval, you might kill your Memcached with a ton of GET commands because each request will try to look up the same keys. This is especially apparent with compacted blocks, where time series typically span more than one day in a block;

- Another thing is that it might lead to retrieving more data than is actually needed. A series in Thanos (and Prometheus) spans from some minimum timestamp to some maximum timestamp. During compaction, multiple series with identical labels are joined together in a new, compacted block. But if a series has gaps, or if we are interested only in part of its data (which is the case most of the time, in my humble opinion), then checking the time range is only possible after decoding the data. Thus, with a small split interval, we might be forced to retrieve a bigger part of the chunk than is really needed. Again, this is more visible with compacted blocks because their series usually span bigger time ranges.
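Both problems come down to how many sub-queries end up touching the same block. Here is a rough back-of-the-envelope sketch of that count. The function name `overlappingSubQueries` is hypothetical, timestamps are assumed to be non-negative milliseconds, and sub-queries are assumed to be aligned to multiples of the split interval. It only counts overlaps; it does not model the index cache or chunk decoding.

```go
package main

import "fmt"

const hourMs = int64(60 * 60 * 1000)

// overlappingSubQueries returns how many interval-aligned sub-queries of the
// query range [queryStart, queryEnd) touch a block covering
// [blockMin, blockMax). Each of them would look up the same block.
func overlappingSubQueries(queryStart, queryEnd, blockMin, blockMax, interval int64) int {
	// Clamp the query range to the part that overlaps the block.
	lo, hi := queryStart, queryEnd
	if blockMin > lo {
		lo = blockMin
	}
	if blockMax < hi {
		hi = blockMax
	}
	if lo >= hi {
		return 0 // the query does not touch the block at all
	}
	if interval <= 0 {
		return 1 // no splitting: a single query touches the block
	}
	first := lo / interval      // index of the first overlapping sub-query
	last := (hi - 1) / interval // index of the last overlapping sub-query
	return int(last - first + 1)
}

func main() {
	// One compacted 48h block, queried over its whole range.
	for _, interval := range []int64{hourMs, 24 * hourMs, 48 * hourMs} {
		n := overlappingSubQueries(0, 48*hourMs, 0, 48*hourMs, interval)
		fmt.Printf("interval=%dh sub-queries hitting the block=%d\n", interval/hourMs, n)
	}
}
```

Without single-flight, every one of those sub-queries repeats the same index cache lookups for that block, so the count is a rough multiplier on the GET traffic for it.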

So, given all of this, what’s the optimal split interval? It should be around our typical block size but not too small so as to avoid the mentioned issues. The possible block sizes are defined here in Thanos. Typically, if the Thanos Compactor component is working, you will get 2-day blocks once the data has been in remote object storage for around 2 days. But what about the even bigger blocks, which are 14 days in length? I think that at that point downsampling starts playing a part, and we should not really be concerned with the aforementioned problems anymore.

In conclusion, I think most of the time you will want the split interval to be either 24h or 48h: the former if your queries are mostly computation-heavy, so that you can use more CPU cores, and the latter if your queries are mostly retrieval-heavy, so that you avoid over-fetching data and sending more retrieve/store operations than needed.

Please let me know if you have spotted any problems or if you have any suggestions! I am always keen to hear from everyone!