Have you ever run `ls` on the Prometheus TSDB data directory just to understand what time ranges your data covers? Here is an example listing:

```
drwxrwxr-x 3 gstatkevicius gstatkevicius  4096 Jun 26  2020 01EBRP7DA9N67RTT9AFE0KMXFJ
drwxrwxr-x 3 gstatkevicius gstatkevicius  4096 Jun 26  2020 01EBRP7DCM68X5SPQGK3T8NNER
drwxrwxr-x 2 gstatkevicius gstatkevicius  4096 Jun 26  2020 chunks_head
-rw-r--r-- 1 gstatkevicius gstatkevicius     0 Apr 17  2020 lock
-rw-r--r-- 1 gstatkevicius gstatkevicius 20001 Jan  2 14:01 queries.active
drwxr-xr-x 3 gstatkevicius gstatkevicius  4096 Jan  2 14:01 wal
```

We could assume that each block covers 2 hours. However, blocks can be compacted, i.e. “joined”, into bigger ones if compaction is enabled, and then they no longer line up as a neat sequence of equal-sized ranges. Plus, this `ls` output doesn't even show when the different blocks were created: the dates it prints are modification times. We could theoretically get creation times with stat(2) or statx(2) and some shell magic, but that's cumbersome. Overall, this listing is not very helpful, and we can do better.

Enter Thanos. To keep this article short I will not describe it in detail; suffice it to say that it extends Prometheus's capabilities and lets you store TSDB blocks in remote object storage such as Amazon S3. Thanos has a component called the bucket block viewer. Although it ordinarily works with data in remote object storage, we can make it work with local data as well by using the FILESYSTEM storage type.

To use Thanos to visualize our local TSDB, we have to pass it an object storage configuration file. If your Prometheus stores data in, let's say, `/prometheus/data`, then `--objstore.config-file` needs to point to a file with the following content:

```yaml
type: FILESYSTEM
config:
  directory: "/prometheus/data"
```

To start up the web interface for the storage bucket, execute the following command:

```shell
thanos tools bucket web --objstore.config-file=my-config-file.yaml
```

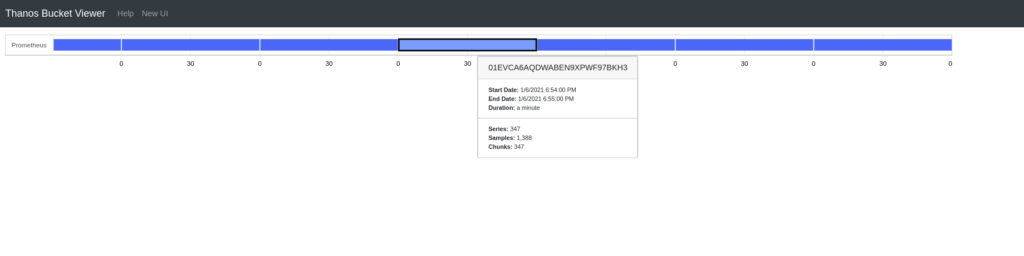

The web UI becomes available on http://localhost:10902. Here is how it looks like on a very simple (where all of the blocks are contiguous and of the same compaction level) TSDB:

Feel free to use Thanos to explore your Prometheus data! Support for viewing vanilla Prometheus blocks was merged on January 10th, 2021, so only Thanos versions released after that date have this functionality. If it does not work for you, please update your Thanos.