Like most software nowadays, Thanos does some caching. In particular, we will talk about the index cache in Thanos Store and its size. After a certain bug was fixed, users who were running with the default size of 200MiB started hitting problems, because the limit began to be enforced whereas it had not been before.

I feel that this is the perfect opportunity to explain how the index cache works and how to determine the appropriate size for your deployment.

Modus Operandi

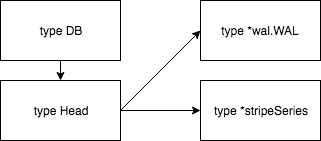

On a user’s request, Thanos Store needs to go into the configured remote storage and retrieve the data required to fulfill that query. However, how does it know which samples to retrieve? The answer is index files. Just like the TSDB used in Prometheus, it needs the index files to know where to get the relevant data to execute a user’s Series() call.

There are two types of items stored in that cache: postings and series. You can find all of the detailed information here; however, let me sum it up in this post.

First of all, we need to find out which series contain a given label pair. This is what postings give us.

Now, what is the series data? If you have ever seen what the TSDB looks like on disk, you might have noticed a directory called chunks. That is where the actual series data lies. However, how do we know what is in there? That is where the series data in the index files comes in. It contains information about where to find the chunks: the chunk count, references to the data, minimum and maximum time, et cetera.

Thus, to avoid constantly looking up the same data in the indices if we are refreshing the same dashboard in Grafana, an index cache was added to Thanos Store. It saves a ton of requests to the underlying remote storage.

How do we know that it is working, though? Let’s continue on to the next section…

Available metrics

- thanos_store_index_cache_items_added_total: total number of items that were added to the index cache;
- thanos_store_index_cache_items_evicted_total: total number of items that were evicted from the index cache;
- thanos_store_index_cache_requests_total: total number of requests to the cache;
- thanos_store_index_cache_items_overflowed_total: total number of items that could not be added to the cache because they were too big;
- thanos_store_index_cache_hits_total: total number of times that the cache was hit;
- thanos_store_index_cache_items: total number of items that are in the cache at the moment;
- thanos_store_index_cache_items_size_bytes: total byte size of items in the cache;
- thanos_store_index_cache_total_size_bytes: total byte size of keys and items in the cache;
- thanos_store_index_cache_max_size_bytes: a constant metric which shows the maximum size of the cache;
- thanos_store_index_cache_max_item_size_bytes: a constant metric which shows the maximum item size in the cache.

As you can see, that is a lot to take in. But it is good news, since it means we know a lot about the current state of the cache at any time.

Before this bug was fixed in 0.3.2, you would have observed that thanos_store_index_cache_items_evicted_total was almost always 0, because the current size of the index cache was not being increased when items were added. Thus, the only time we would have evicted anything from the cache was when a huge internal limit was hit.

Obviously, this means that back in the day RAM usage grew without bound, and users did not run into this problem because we were caching practically everything. That is not the case anymore.
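To make the size accounting concrete, here is a minimal sketch of a byte-bounded LRU cache. It is a toy illustration, not the Thanos implementation: the class name, the key strings and the counters are all hypothetical, and the counters only loosely mirror the metrics listed above. The point is the marked line: if the current size is never increased on insert, the eviction loop never runs and the cache grows forever.

```python
from collections import OrderedDict


class ByteBoundedLRU:
    """Toy LRU cache limited by the total size of its values in bytes."""

    def __init__(self, max_size_bytes: int) -> None:
        self.max_size_bytes = max_size_bytes
        self.current_size_bytes = 0
        self.items: "OrderedDict[str, bytes]" = OrderedDict()
        # Hypothetical counters, loosely mirroring the metrics above.
        self.requests = 0
        self.hits = 0
        self.added = 0
        self.evicted = 0
        self.overflowed = 0

    def get(self, key: str):
        self.requests += 1
        value = self.items.get(key)
        if value is not None:
            self.hits += 1
            self.items.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key: str, value: bytes) -> None:
        if len(value) > self.max_size_bytes:
            self.overflowed += 1  # too big to ever fit
            return
        if key in self.items:
            self.current_size_bytes -= len(self.items.pop(key))
        # Evict least recently used items until the new one fits.
        while self.current_size_bytes + len(value) > self.max_size_bytes:
            _, old = self.items.popitem(last=False)
            self.current_size_bytes -= len(old)
            self.evicted += 1
        self.items[key] = value
        self.current_size_bytes += len(value)  # without this, nothing is ever evicted
        self.added += 1


if __name__ == "__main__":
    cache = ByteBoundedLRU(max_size_bytes=10)
    cache.set("postings:job=node", b"12345")
    cache.set("series:42", b"12345")
    cache.set("series:43", b"12345")  # does not fit, so the oldest entry goes
    print(cache.added, cache.evicted, cache.current_size_bytes)
```

Keys such as "postings:job=node" are made up for the example; the real cache keys look different.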

Currently, for some users, an index cache that is too small manifests as the number of goroutines growing into the tens of thousands when a request comes in. This happens because each request goes into its own goroutine, and if a request asks for a relatively large amount of data that is not in the cache, we need to retrieve a lot of postings and series data. You can recognize this situation by thanos_store_index_cache_hits_total being relatively small compared to thanos_store_index_cache_requests_total.

Determining the appropriate size

So, let’s get to the meat of the problem: if the default value of 200MiB is giving you problems then how do you select a value that is appropriate for your deployment?

Just like with all caches, we want it to be as hot as possible, meaning that almost every request should hit it. Check whether, in your current deployment, thanos_store_index_cache_hits_total is only a bit lower than thanos_store_index_cache_requests_total. Depending on the number of requests coming in, the difference might be bigger or smaller, but the two should still be close. Different sources give different numbers: ideally the hit ratio should be around 90%, but lower values like 50-60% are acceptable as well.
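As a rough illustration, the hit ratio is just the increase of the hits counter divided by the increase of the requests counter over the same time window. The helper names below are hypothetical, and the thresholds are simply the rule-of-thumb numbers from the paragraph above, not official guidance.

```python
def hit_ratio(hits_delta: float, requests_delta: float) -> float:
    """Fraction of cache requests that were hits within one time window."""
    if requests_delta <= 0:
        return 0.0  # no traffic in the window, nothing to judge
    return hits_delta / requests_delta


def classify(ratio: float) -> str:
    """Label a hit ratio using the rule-of-thumb thresholds (assumed)."""
    if ratio >= 0.9:
        return "ideal"
    if ratio >= 0.5:
        return "acceptable"
    return "too low"


if __name__ == "__main__":
    # Made-up counter increases over some window.
    ratio = hit_ratio(hits_delta=5500, requests_delta=10000)
    print(ratio, classify(ratio))
```

The inputs are the deltas of thanos_store_index_cache_hits_total and thanos_store_index_cache_requests_total between two scrapes, not their absolute values, since both are counters that only grow.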

Theoretically, you could take the average size of the index files and figure out how many of them you would want to hold in memory. Then multiply those two numbers and specify the result as --index-cache-size. In practice, a cache of that size will hold series and postings data from even more index files, since the index files contain other information as well.
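The arithmetic is simple enough to sketch. The function name and the example sizes below are hypothetical; plug in the real sizes of the index files from your own bucket.

```python
MIB = 1024 * 1024


def index_cache_size_bytes(index_file_sizes: list, files_to_hold: int) -> int:
    """Average index file size multiplied by the number of files to keep hot."""
    if not index_file_sizes:
        raise ValueError("need at least one index file size")
    # Multiply first, then divide, to stay in integer arithmetic.
    return sum(index_file_sizes) * files_to_hold // len(index_file_sizes)


if __name__ == "__main__":
    # Made-up index file sizes for three blocks.
    sizes = [150 * MIB, 250 * MIB, 200 * MIB]
    estimate = index_cache_size_bytes(sizes, files_to_hold=10)
    print(estimate // MIB, "MiB")
```

Treat the result as an upper-end starting point and then adjust it based on the metrics.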

The next thing to look at is the difference between thanos_store_index_cache_items_added_total and thanos_store_index_cache_items_evicted_total over some time window. Ideally, we should avoid a situation where we are constantly adding items to the cache and removing them again. Otherwise, we end up with cache thrashing: Thanos Store does not perform any useful work and the number of goroutines is constantly high (in the millions). Please note that thanos_store_index_cache_items_evicted_total is only available from 0.4.
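One way to put a number on this is the ratio of evictions to additions within a window. This is only a sketch: the function names are hypothetical and the 0.9 threshold is an assumption chosen for illustration, not a value taken from Thanos.

```python
def eviction_ratio(added_delta: float, evicted_delta: float) -> float:
    """Evicted items per added item within one time window."""
    if added_delta <= 0:
        return 0.0  # nothing was added, so there is no churn to measure
    return evicted_delta / added_delta


def is_thrashing(added_delta: float, evicted_delta: float,
                 threshold: float = 0.9) -> bool:
    """True when nearly every insert pushes another item out (assumed threshold)."""
    return eviction_ratio(added_delta, evicted_delta) >= threshold


if __name__ == "__main__":
    # Made-up counter increases over some window.
    print(is_thrashing(added_delta=1000, evicted_delta=950))
    print(is_thrashing(added_delta=1000, evicted_delta=100))
```

A ratio close to 1 sustained over a long period means the cache is full and items are pushed out almost as fast as they arrive, which is the thrashing pattern described above.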

Another metric that can indicate problems is thanos_store_index_cache_items_overflowed_total. It should always be 0. A higher value means one of three things: we tried to add an item which by itself was too big for the cache, we had to remove more than saneMaxIterations items from the cache, or we removed everything and the item still did not fit. This mostly happens only when there is huge index cache pressure. To fix it, you need to increase the index cache size.

Also, take a look at the query timings of requests coming into your deployment. If it takes more than 10 seconds to open a dashboard in Grafana with 24 hours of data, that most likely indicates a problem with the index cache too.

Lastly, let me share some numbers. On one of my deployments, there are about 20 queries coming in every second. Obviously, it depends on the nature of those queries, but a 10GB index cache lasts for about a week before we hit the limit and have to start evicting items from it. With that size, the node works very smoothly.