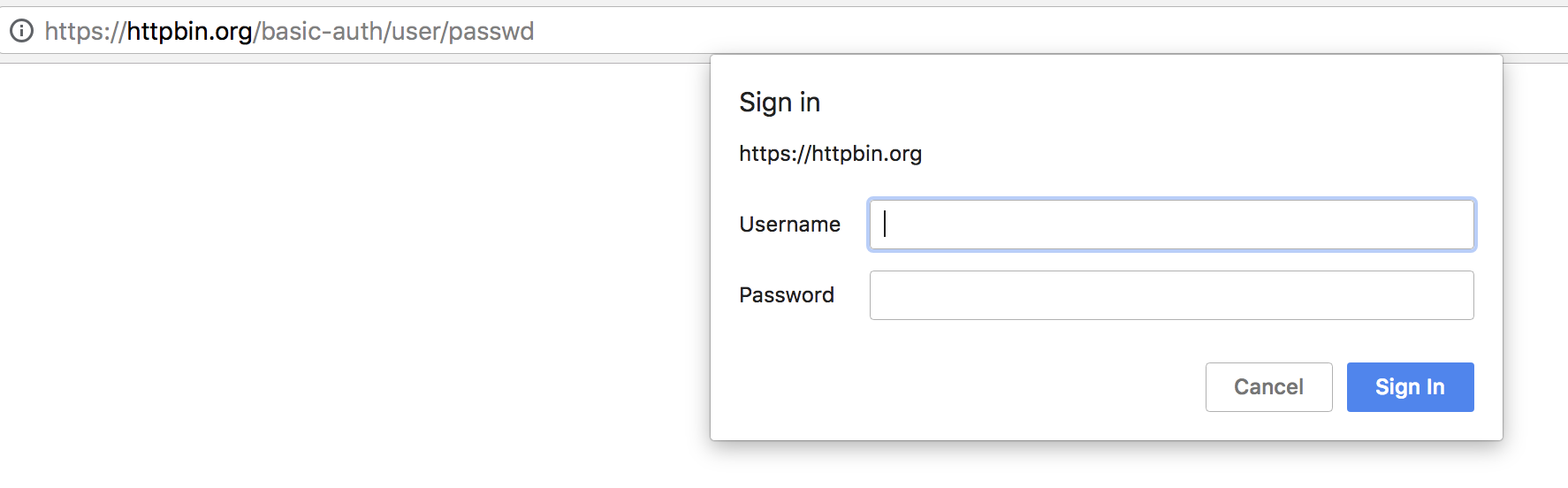

There are a lot of different authentication schemes out in the wild, but one of them, which is relatively popular because most browsers implement it, has a peculiar “bug” in its specification: you cannot use a colon (‘:’) in the username field. If you have ever seen a window such as this:

Then that website is probably using HTTP basic auth, and you cannot use a colon in your username on that site because the scheme simply has no way to express it.

Why, you might ask? Because in that authentication scheme a colon separates the username from the password. The credentials are transmitted as a single string in the format username:password, so if the username contained a colon, the HTTP server could not tell where the username ends and the password begins.
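To make the ambiguity concrete, here is a minimal sketch of how a client could build the header value, assuming Python 3 and UTF-8 credentials. The function name `basic_auth_header` and the sample usernames and passwords are made up for illustration; they do not come from any particular library.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build an Authorization header value for HTTP basic auth.

    Hypothetical helper: it joins the two fields with a colon and
    base64-encodes the result, without validating the username.
    """
    user_pass = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(user_pass).decode("ascii")


# The well-known example credentials from the RFCs.
print(basic_auth_header("Aladdin", "open sesame"))
# Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==

# Two different username/password pairs produce the same header,
# so the server has no way to tell them apart.
a = basic_auth_header("alice:x", "secret")
b = basic_auth_header("alice", "x:secret")
print(a == b)
# True
```

Because both pairs collapse into the same string before encoding, the only consistent rule a server can apply is to treat the first colon as the separator.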

This scheme is defined in RFC 7617, which obsoletes the older RFC 2617. As the RFCs themselves say:

Furthermore, a user-id containing a colon character is invalid, as the first colon in a user-pass string separates user-id and password from one another; text after the first colon is part of the password. User-ids containing colons cannot be encoded in user-pass strings.

That is an excerpt from RFC 7617. This is from RFC 2617:

To receive authorization, the client sends the userid and password, separated by a single colon (":") character, within a base64 encoded string in the credentials.

As you can see for yourself, the older version of this RFC (2617 is from June 1999, whereas 7617 is from September 2015) does not spell out in prose that a colon cannot be used in the username with this scheme. It is only stated implicitly, through the grammar, which defines userid as *<TEXT excluding ":">.

You might be surprised, but a lot of software gets this wrong. For example, I recently looked into using ml2grow/GAEPyPI to run a simple PyPI on Google App Engine and reduce costs. The code is all nice and dandy, but the username and password parsing is a bit broken. It all happens here:

(username, password) = base64.b64decode(auth_header.split(' ')[1]).split(':')

As you can see, this code breaks when .split(':') returns more than two results, that is, when the username or password field itself contains a colon: the unpacking into two variables then fails. This could be fixed by splitting only on the first colon, for example with .split(':', 1), so that the first part is used as the username and everything after it, colons included, is used as the password. I will open a pull request soon to fix this issue. There are probably many more examples such as this.
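A minimal sketch of a more forgiving parser, assuming Python 3 and UTF-8 encoded credentials, could look like the following. The function name `parse_basic_auth` is hypothetical and this is not the actual GAEPyPI fix; it only illustrates splitting on the first colon.

```python
import base64
from typing import Tuple


def parse_basic_auth(auth_header: str) -> Tuple[str, str]:
    """Split a basic auth Authorization header into (username, password).

    Hypothetical helper: only the first colon is treated as the
    separator, so the password may contain colons.
    """
    scheme, _, encoded = auth_header.partition(" ")
    if scheme.lower() != "basic" or not encoded:
        raise ValueError("not a basic auth header")

    # b64decode returns bytes in Python 3, so decode before splitting.
    decoded = base64.b64decode(encoded.strip()).decode("utf-8")

    # partition() splits on the first occurrence only.
    username, separator, password = decoded.partition(":")
    if not separator:
        raise ValueError("credentials contain no colon")
    return username, password


header = "Basic " + base64.b64encode(b"alice:pa:ss:word").decode("ascii")
print(parse_basic_auth(header))
# ('alice', 'pa:ss:word')
```

Using `partition(":")` rather than `split(":")` keeps the result at exactly two fields regardless of how many colons the password contains.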

As far as I know, we can only speculate about why this decision was made. My first thought was that because the Internet and computers were not so fast back in June 1999, the people who wrote RFC 2617 decided to put it all into one field. The problem could easily have been avoided by having two separate fields for the username and the password. Perhaps this would have been too costly? I do not know.

Do you know of any other historical “bugs” in specifications that are still widely used today? Or maybe you know why this RFC was written this way? Please let everyone know in the comments section below. Thanks for reading and happy hacking!