During the most recent iteration of the LFX mentorship program, I had the honor of working on migrating the Thanos project from gogoprotobuf to version 2 of the protobuf API. I worked with my one and only awesome mentee, Rahul Sawra, and another mentor, Lucas Serven, who is also a co-maintainer of Thanos. I want to share the technical lessons I learned from this project.

First of all, let’s quickly look at what protocol buffers are and what the related jargon means. Protocol buffers are a way of serializing structured data. Google originally created them, and they are a popular library that Thanos uses practically everywhere. Thanos also uses gRPC for communication between its components. gRPC is a remote procedure call (RPC) framework: with it, your (micro)services can implement methods that other services can call.

Since Google originally made both, it is not surprising that gRPC is most commonly used with protocol buffers, even though gRPC does not strictly depend on them.
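
To make “a way of serializing data” more concrete, here is a minimal, standard-library-only sketch of the protobuf binary wire format. It hand-encodes a hypothetical message, message Example { int32 a = 1; string b = 2; }, with a = 150 and b = "hi". Each field is written as a tag, (field_number << 3) | wire_type, followed by either a base-128 varint or a length-prefixed byte string. The helper names are made up for illustration, and in practice generated code does all of this for you.

```go
package main

import "fmt"

const (
	wireVarint = 0 // int32, int64, bool, enum, ...
	wireBytes  = 2 // string, bytes, embedded messages, packed repeated fields
)

// appendVarint encodes v as a base-128 varint: 7 bits per byte,
// least-significant group first, with the high bit set on every byte but the last.
func appendVarint(buf []byte, v uint64) []byte {
	for v >= 0x80 {
		buf = append(buf, byte(v)|0x80)
		v >>= 7
	}
	return append(buf, byte(v))
}

// appendTag writes the field key: (fieldNumber << 3) | wireType.
func appendTag(buf []byte, fieldNumber, wireType uint64) []byte {
	return appendVarint(buf, fieldNumber<<3|wireType)
}

// appendVarintField encodes a scalar field such as int32.
func appendVarintField(buf []byte, fieldNumber, v uint64) []byte {
	buf = appendTag(buf, fieldNumber, wireVarint)
	return appendVarint(buf, v)
}

// appendBytesField encodes a length-delimited field such as string.
func appendBytesField(buf []byte, fieldNumber uint64, b []byte) []byte {
	buf = appendTag(buf, fieldNumber, wireBytes)
	buf = appendVarint(buf, uint64(len(b)))
	return append(buf, b...)
}

func main() {
	// Hypothetical message: Example{a: 150, b: "hi"}.
	var msg []byte
	msg = appendVarintField(msg, 1, 150)
	msg = appendBytesField(msg, 2, []byte("hi"))
	fmt.Printf("% x\n", msg) // 08 96 01 12 02 68 69
}
```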

gogoprotobuf is a fork of the original protocol buffers compiler for Go that has (had?) some improvements over the original. However, it is not without downsides. Over time, we’ve accumulated random hacks to make the generated code compile and work. For example, we edit import statements with sed. This looks like an opportunity to improve code generation tools; perhaps they need more checks? What’s more, these hacks turn the whole code generation into a “house of cards”: remove one small part and the whole thing crumbles. But, on the other hand, it is not surprising that an unmaintained tool has a bug here and there.

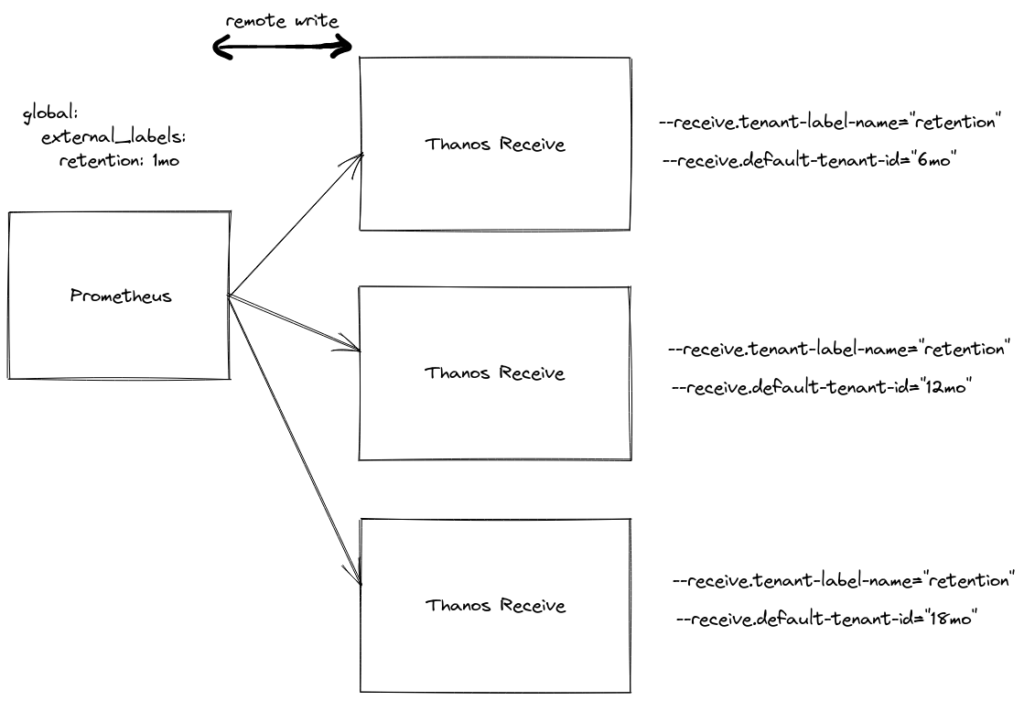

Thanos started using gogoprotobuf at some point in the past, but after a while gogoprotobuf became unmaintained. Later, the fine Vitess folks came up with their own protocol buffers compiler plugin for the v2 API. It includes optimizations that bring it on par with gogoprotobuf’s performance. In addition, it supports pooling memory on the unmarshaling side, i.e., on the receiver. Unfortunately, the sender’s side still cannot use pooling. gRPC’s SendMsg() method returns before the message gets on the wire, so the message cannot safely be reused right after the call. I feel like it’s a serious performance issue, and I’m surprised that the gRPC developers still haven’t fixed this problem. This is the first lesson that I wanted to share.
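
Below is a minimal sketch of what receiver-side pooling looks like, using only the standard library’s sync.Pool. The Series type, its Reset method and the handle function are hypothetical stand-ins. This is not the API of the Vitess plugin.

```go
package main

import (
	"fmt"
	"sync"
)

// Series is a hypothetical stand-in for a generated, decoded message.
type Series struct {
	Labels  []string
	Samples []float64
}

// Reset empties the message but keeps the backing arrays, so their
// capacity is reused by the next decode instead of being reallocated.
func (s *Series) Reset() {
	s.Labels = s.Labels[:0]
	s.Samples = s.Samples[:0]
}

var seriesPool = sync.Pool{
	New: func() any { return new(Series) },
}

// handle plays the receiver: it borrows a message from the pool, decodes
// into it, uses it, and returns it. This is safe because nothing else
// references the message once handle returns.
func handle(labels []string, samples []float64) int {
	s := seriesPool.Get().(*Series)
	defer func() {
		s.Reset()
		seriesPool.Put(s)
	}()

	// Stand-in for unmarshaling the wire bytes into the reused message.
	s.Labels = append(s.Labels, labels...)
	s.Samples = append(s.Samples, samples...)

	return len(s.Labels) + len(s.Samples)
}

func main() {
	fmt.Println(handle([]string{"job", "thanos"}, []float64{1, 2})) // 4
	fmt.Println(handle([]string{"env"}, nil))                       // 1
}
```

This only works because the receiver knows exactly when it is done with the message. Suppose the sender put the message back into the pool right after SendMsg() returns. The next user could then overwrite it while gRPC may still be reading it, which is why pooling is off the table on the sending side.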

Another lesson is about copying generated code. Sometimes the generated code is not perfect. The easiest and most straightforward fix is to copy it, change the parts that you don’t like, and commit the result to Git. However, that is far from ideal. We made this mistake in the Thanos project: we copied a generated struct and its methods to another file and added our optimization. We call it the ZLabelSet. Here is its code. As you can see, it is an optimization to avoid an allocation. However, this way the members of the generated struct became deeply coupled with the rest of the custom code and effectively became an interface. That makes it much more painful to change their types. The migration requires exactly such a change, because the v2 API does not support non-nullable struct members: message fields are always generated as pointers.

On the other hand, introducing interfaces in Go to decouple such code has its own performance cost: dynamic dispatch and possibly extra heap allocations. So don’t try to optimize too heavily. Profile, and always pick your battles.
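
To illustrate the non-nullable difference, here is a simplified sketch of the struct shapes the two generators produce for a repeated message field. It also shows the kind of glue code that custom code written against the old layout ends up needing. The type and function names are hypothetical, and real protoc-gen-go structs also carry internal bookkeeping fields that are omitted here.

```go
package main

import "fmt"

// Label is a hypothetical message: message Label { string name = 1; string value = 2; }.
type Label struct {
	Name  string
	Value string
}

// GogoLabelSet approximates gogoproto output for
// `repeated Label labels = 1 [(gogoproto.nullable) = false];`:
// the labels are stored by value in one contiguous backing array.
type GogoLabelSet struct {
	Labels []Label
}

// V2LabelSet approximates protoc-gen-go output for the same field:
// a slice of pointers, where each element is a separate object.
// (Real v2 structs also contain internal fields, omitted here.)
type V2LabelSet struct {
	Labels []*Label
}

// toV2 is the kind of glue code that becomes necessary once custom code
// written against the value layout meets the pointer layout.
func toV2(in GogoLabelSet) V2LabelSet {
	out := V2LabelSet{Labels: make([]*Label, 0, len(in.Labels))}
	for i := range in.Labels {
		// Point into the caller's backing array instead of copying each label.
		out.Labels = append(out.Labels, &in.Labels[i])
	}
	return out
}

func main() {
	g := GogoLabelSet{Labels: []Label{{Name: "job", Value: "thanos"}, {Name: "env", Value: "prod"}}}
	v := toV2(g)
	fmt.Println(len(v.Labels), v.Labels[1].Value) // 2 prod
}
```

Allocation-avoiding tricks usually depend on the labels being stored by value in one contiguous array. Any hand-written optimization that does so has to be rewritten or bridged like this once the generated type switches to pointers.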

This is the second lesson: please try not to copy generated code. Instead, write your own protocol buffers compiler plugin or something similar. It is actually quite easy to do.

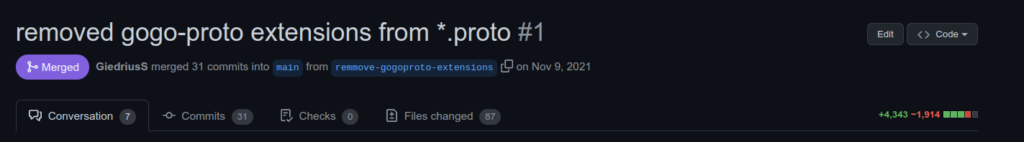

Last but not least, I also want to talk about goals and focus. We divided the whole project into as many small parts as possible, and since then our main focus has been on getting the existing test suite to pass. However, that is not always the best idea. gogoprotobuf has an extension (gogoproto.stdtime) that lets struct fields use time.Time, a type that is more natural for Go programmers. Alas, the same extension doesn’t exist in vanilla protocol buffers for Go, which has its own separate type: google.protobuf.Timestamp. Timestamps are used all over the Thanos codebase, so we accidentally ended up defining a bunch of conversion functions between those two types, and they weren’t identical. So, we had to take a step back and look at the invariants. To be more exact, time.Time represents an instant in time together with a location, whereas google.protobuf.Timestamp only stores the seconds and nanoseconds elapsed since the Unix epoch (1970-01-01T00:00:00Z). Only after we unified the conversion functions did everything work correctly. Keep in mind that those “small” parts of this project are thousands of lines added or removed, so it’s really easy to get lost. For example, this is one pull request that got merged:

In conclusion, the third, more general lesson is that sometimes it is better to take a step back and look at how everything should work together instead of fixating on one small part.

Perhaps in the future, generics (introduced in Go 1.18) will replace some of this code generation. That should make life easier. I also hope that we will pick up this work again soon. Then I would be able to announce to everyone that we have finally switched to the upstream version of protocol buffers for Go. There seems to be an appetite for that in our community, so the future looks bright. We’ve already removed the gogoproto extensions from our .proto files, and we are in the middle of removing the gogoproto compiler (https://github.com/rahulii/thanos/pull/2). We just need someone to finish all of this up, and to start using the pooling functionality in Thanos Query. Will it be you who helps us finish this work? 😍🍻